Neural network pioneer Geoffrey Hinton, who quit Google in 2023 to warn of the risks of artificial intelligence, has elaborated on his p(doom): the probability of catastrophic outcomes resulting from AI.

In a Q&A session with METR (a research non-profit that focuses on assessing AI risks), Hinton said he thinks the probability of "the existential threat" from AI is greater than 50%.

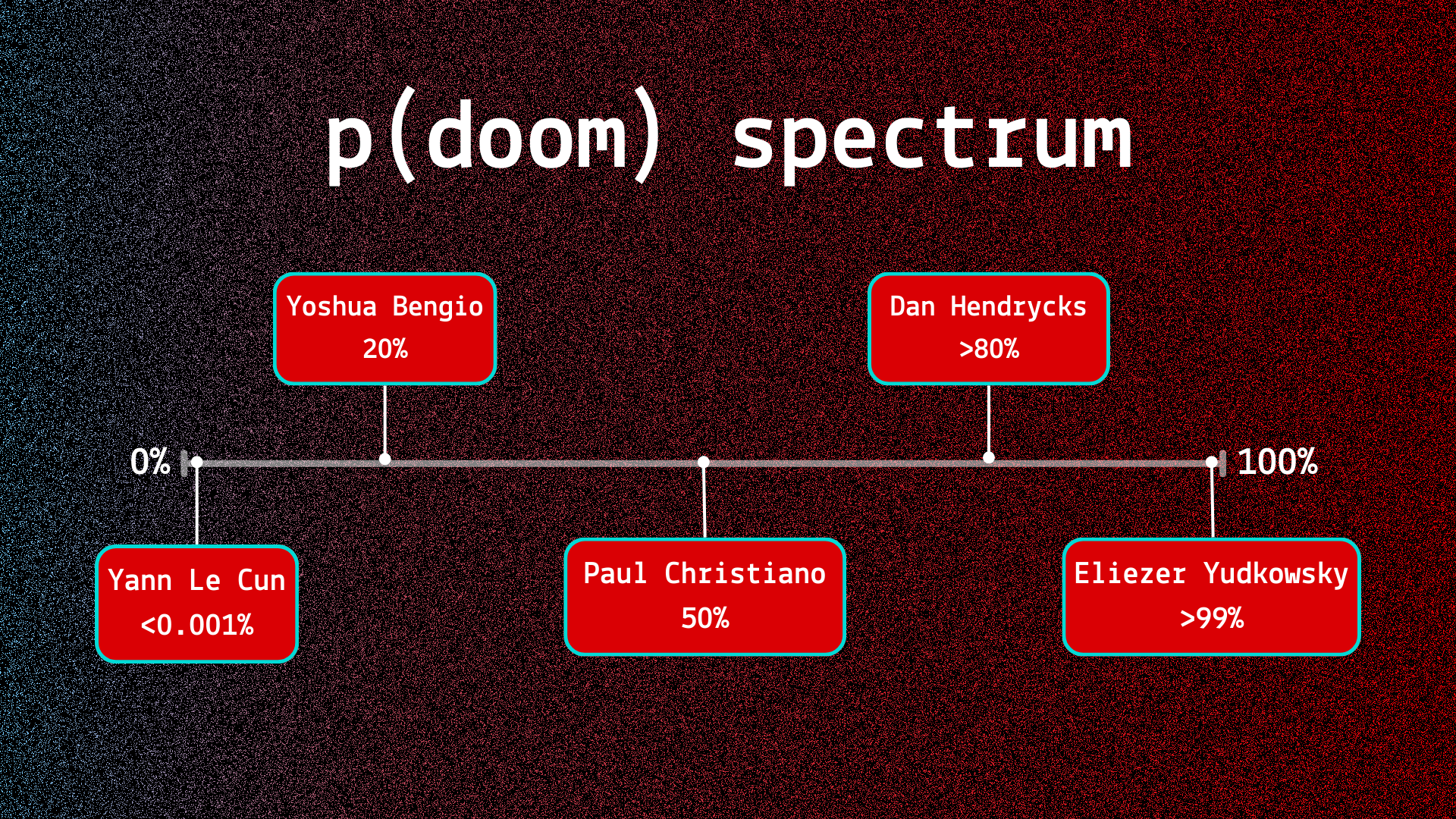

An audience member asked Hinton how he accounts for the widely varying p(doom) estimates of different experts in the field, and what can be done to build consensus on AI risk. At one end of the spectrum, Yann LeCun, chief AI scientist at Meta, is relatively unworried and has argued that extinction from AI is "less likely than an asteroid wiping us out".

At the other extreme, figures such as Roman Yampolskiy and Eliezer Yudkowsky have both given estimates of over 99%, with the former comparing the prospect of humans controlling superintelligent AI to that of an ant controlling the outcome of a football match being played around it.

Given the huge variance, Hinton questioned the validity of extreme p(doom) estimates on both ends of the spectrum, calling them “insane”.

As he put it: "If you think it's zero, and somebody else thinks it's 10%, you should at least think it's like 1%."

The same reasoning led Hinton to temper his personal estimate of more than 50% to 10-20% once he took others' opinions into account, a range that includes the mean response of AI researchers in a 2023 survey (14.4%, N=2778).

Earlier at the same event, Hinton described reinforcement learning from human feedback (RLHF), a technique used by companies such as OpenAI to try to align their models with human preferences, as a "pile of crap", and said that, as a result, his p(misalignment) was "pretty high".

After leaving Google to speak freely about AI risk, Hinton joined fellow "Godfather of deep learning" Yoshua Bengio in signing the one-sentence 2023 open letter from the Center for AI Safety, which read simply:

> Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

Hinton did not sign the Future of Life Institute's letter calling for a six-month pause on training models more powerful than GPT-4, but he did co-author a paper with Bengio, Stuart Russell, and others urging governments to address the extreme risks posed by AI.